A/B Testing in Email Marketing: How to Optimize Your Campaigns for Better Results

You’ve got a fantastic product, compelling copy, and a subscriber list that’s ready to engage. You hit ‘send’ on your latest email campaign, lean back, and wait for the deluge of opens and conversions.

Except, the results are… lukewarm.

The subject line you thought was a slam dunk only pulled a 15% open rate. The Call-to-Action (CTA) button you meticulously designed is barely getting any clicks. It’s frustrating, isn't it? You’re pouring time and effort into your email marketing, but it feels like you're throwing darts in the dark.

The secret to transitioning from 'hoping for the best' to knowing what works lies in one fundamental, scientific process: A/B Testing, also known as split testing.

A/B testing isn't just a best practice; it is the backbone of a high-performing email strategy. It’s the engine that powers continuous improvement, turning assumptions into data-backed decisions and transforming average campaigns into revenue-generating machines.

In this ultimate guide, we’re going to demystify A/B testing in email marketing. We’ll cover the core mechanics, walk through exactly what you should be testing, outline the essential best practices for statistical significance, and discuss how modern platforms like Seamailer have made this powerful optimization tool accessible to every marketer.

Part 1: The Essential Core of Email A/B Testing

Before we dive into the nitty-gritty of subject lines and button colors, let’s solidify our understanding of what A/B testing actually is and why it's a non-negotiable tool in your email marketing arsenal.

What Exactly is A/B Testing?

Simply put, A/B testing is an experiment where you take two versions of a single variable, send them to equally sized, randomized subsets of your audience, and measure which version performs better against a specific goal.

- Version A (The Control): This is your baseline email, the original version you would have sent without testing.

- Version B (The Variant): This is the modified email, where only one element is changed from the Control.

- The Goal: A defined metric you want to improve, such as Open Rate, Click-Through Rate (CTR), or Conversion Rate.

Your Email Service Provider (ESP), such as Seamailer, distributes the emails, monitors performance in real time, and, once enough data has been collected to reach statistical significance, declares a ‘winner.’ The winning version is then typically sent to the remainder of your subscriber list.
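As a rough illustration of the mechanics, the split can be sketched in a few lines of Python. This is a minimal sketch, not how any particular ESP implements it; the function name `split_for_ab_test`, the 20% test fraction, and the example addresses are all assumptions made for the example.

```python
import random

def split_for_ab_test(subscribers, test_fraction=0.2, seed=None):
    """Randomly split a list into equal A and B groups plus a remainder.

    test_fraction is the share of the list used for the test as a whole,
    so each version receives half of it. (Hypothetical helper.)
    """
    rng = random.Random(seed)
    shuffled = list(subscribers)   # copy so the caller's list is untouched
    rng.shuffle(shuffled)          # randomize order to avoid biased groups
    group_size = int(len(shuffled) * test_fraction) // 2
    group_a = shuffled[:group_size]
    group_b = shuffled[group_size:2 * group_size]
    remainder = shuffled[2 * group_size:]  # receives the winner later
    return group_a, group_b, remainder

# Illustrative list of 10,000 made-up addresses
subscribers = [f"user{i}@example.com" for i in range(10_000)]
group_a, group_b, remainder = split_for_ab_test(subscribers, test_fraction=0.2, seed=42)
print(len(group_a), len(group_b), len(remainder))  # 1000 1000 8000
```

Shuffling before slicing is what makes the groups random; splitting an unshuffled list (for example, oldest subscribers first) would bake a bias into the test.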

Why You Can’t Afford to Skip Split Testing

Marketers often cite lack of time or resources as reasons for skipping A/B tests. This is a critical error. The time invested in a test is an investment that pays dividends for every future email.

- Eliminate the Guesswork: Your audience is unique. What works for a competitor might fail spectacularly for you. A/B testing provides objective data about your specific subscribers’ preferences. You might assume your list loves emojis in the subject line, but the data might tell a different story.

- Drive Incremental Gains (The Snowball Effect): One successful test might boost your open rate by 5%. The next test boosts your CTA click rate by 8%. These small, consistent wins compound over time because each stage of the funnel multiplies the next. An initial 5% increase in open rate, combined with a later 5% increase in conversion rate, yields 1.05 × 1.05 = 1.1025, or about 10.25% more sales rather than a flat 10%, and the gap over simple addition widens with every further win.

- Boost Your Bottom Line: Higher open rates mean more people see your offer. Higher click-through rates mean more traffic to your website. Higher conversion rates mean more sales. Every optimized element directly translates to a better ROI on your entire email program.
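The compounding effect is easy to check with a little arithmetic. The sketch below uses a simplified funnel (sales = recipients × open rate × click rate × conversion rate); the baseline rates are made-up numbers chosen only for illustration.

```python
def funnel_sales(recipients, open_rate, click_rate, conversion_rate):
    """Expected sales from a simple multiplicative email funnel."""
    return recipients * open_rate * click_rate * conversion_rate

# Hypothetical baseline: 20% opens, 10% of openers click, 5% of clickers buy
baseline = funnel_sales(10_000, 0.20, 0.10, 0.05)

# Same funnel after a 5% relative lift in open rate and in conversion rate
improved = funnel_sales(10_000, 0.20 * 1.05, 0.10, 0.05 * 1.05)

lift = improved / baseline - 1
print(f"Overall lift in sales: {lift:.2%}")  # Overall lift in sales: 10.25%
```

Because the stages multiply, the relative lift does not depend on the baseline rates you plug in; only the relative improvements matter.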

Part 2: The Core Variables – What Should You Test First?

Effective A/B testing begins with high-impact, low-effort changes. If a change is simple to implement but has a massive impact on your key metrics, it should be your priority.

Here are the most common and impactful variables, categorized by the primary metric they influence:

A. Variables that Impact Open Rate (The Inbox Envelope)

The primary goal of your ‘Inbox Envelope’ is to compel the subscriber to click open.

Variables and what to test

1. Subject Line: Test length (short vs. long), tone (urgent vs. benefit-driven), personalization (with name vs. without), emojis (with vs. without), and question vs. statement. Example hypothesis: a subject line that asks a direct question will generate a higher open rate than a plain statement.

2. Preheader Text: Test summarizing the email content, extending the subject line's message, including a secondary CTA, or offering a sense of urgency or intrigue. Example hypothesis: a preheader that offers a direct, time-sensitive benefit will outperform one that simply summarizes the body copy.

3. 'From' Name: Test a person's name (e.g., 'Sarah from [Company]') vs. the company name (e.g., '[Company] Marketing') vs. a specific team (e.g., '[Company] Support'). Example hypothesis: a real person's name will increase the open rate because it feels more personal and less corporate.

4. Send Time / Day: Test day of the week (Tuesday vs. Thursday) and time of day (8 AM vs. 2 PM, or local time zone optimization). Example hypothesis: sending at 2 PM local time will result in more opens than sending at 8 AM.

B. Variables that Impact Click-Through Rate (CTR) and Conversions (The Body)

Once the email is open, your goal shifts to engagement and action.

Variables and what to test

5. Call-to-Action (CTA): Test button color (high contrast vs. brand color), button copy (e.g., 'Shop Now' vs. 'Get My 20% Discount'), and placement (top of the email vs. bottom). Example hypothesis: a CTA button with action-oriented, benefit-focused copy like 'Secure My Spot' will drive more clicks than generic copy like 'Read More'.

6. Imagery: Test hero image type (product shot vs. lifestyle photo vs. illustration), image size, and image placement (above the fold vs. below the fold). Example hypothesis: a lifestyle image of a person enjoying the product will result in a higher CTR than a static product-only image.

7. Copy Length & Tone: Test short, punchy copy vs. longer, more detailed explanations, and a formal tone vs. a casual, conversational tone. Example hypothesis: for a product launch email, a short, scannable email with bullet points will convert better than a long, detailed narrative.

8. Email Format/Design: Test plain-text vs. rich HTML email, single-column vs. multi-column layout, and mobile-first design elements. Example hypothesis: a minimalist, plain-text email will yield a higher click-to-open rate than a heavily designed HTML template.

Part 3: The Scientific Method – Essential A/B Testing Best Practices

Running an A/B test is easy. Running a meaningful, statistically significant A/B test is a science. Neglecting the following principles will lead to unreliable data and flawed strategies.

1. The Golden Rule: Test Only One Variable at a Time

This is the most critical rule. If you change the subject line and the CTA button color simultaneously, and version B wins, you have no way of knowing which change was responsible. Was it the subject line, the button, or a synergistic effect?

Solution: Isolate your variables. If you want to test two different subject lines, keep everything else—preheader, sender name, body copy, CTA—identical.

2. Formulate a Clear Hypothesis (Why Are You Testing?)

Don't test just for the sake of it. Start with an educated guess. A strong hypothesis defines the variable, the expected outcome, and the reason for the change.

- Weak Test: "I’m testing a red button and a blue button."

- Strong Hypothesis: "We believe that changing the CTA button color from the brand's primary blue to a high-contrast red will increase the Click-Through Rate because the red color stands out more sharply against the white background, drawing the reader's eye."

This ensures your test is strategic and the results are actionable.

3. Ensure Statistical Significance and Sample Size

Results from a small test group can be misleading. If you send an A/B test to 100 people and get a 5-click difference, that difference could be due to pure chance.

- Statistical Significance: This tells you how unlikely the observed difference between A and B would be if the two versions actually performed the same. Most marketers aim for a 90% or 95% confidence level, meaning a gap that large would appear by chance alone no more than 10% or 5% of the time. Your email platform should calculate this for you. Never declare a winner until statistical significance is reached.

- Sample Size: Your test segments need to be large enough to reach that statistical threshold. For smaller lists (under 5,000), you might need to test on the entire list. For larger lists, aim to test on a minimum of 10-20% of your total segment, split equally between Version A and Version B.
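If you want to sanity-check a result yourself, one common approach is a pooled two-proportion z-test. The sketch below is a minimal version using only the standard library; it is one standard method, not necessarily the calculation your ESP runs, and the click counts are invented for illustration.

```python
import math

def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Pooled two-proportion z-test. Returns (z, two-sided p-value)."""
    rate_a = successes_a / n_a
    rate_b = successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 0.0, 1.0  # no variation at all, so nothing to distinguish
    z = (rate_b - rate_a) / std_err
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail
    return z, p_value

# 100 recipients split 50/50, with a 5-click difference (5 vs. 10 clicks)
_, p_small = two_proportion_z_test(5, 50, 10, 50)

# The same click rates (10% vs. 20%) with 1,000 recipients per version
_, p_large = two_proportion_z_test(100, 1000, 200, 1000)

for label, p in [("50 per version", p_small), ("1,000 per version", p_large)]:
    verdict = "significant" if p < 0.05 else "not significant"
    print(f"{label}: p = {p:.4f} ({verdict} at 95% confidence)")
```

The same 10-point gap in click rate fails the 95% threshold on the tiny sample and clears it easily on the larger one, which is the practical reason sample size matters.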

4. Give the Test Enough Time to Run

The duration of your test is crucial.

- Subject Line Test: Can often be concluded in a shorter time (4 to 24 hours), as most opens occur shortly after sending. The winning subject line can then be sent to the remaining, non-tested segment.

- Content/CTA Test: Needs to run longer (up to 48-72 hours), as you are measuring actions deeper in the funnel (clicks and conversions) that people might take later in the day or the next morning.

Rushing a test will provide data that is not representative of your audience's typical behavior.

5. Document and Scale Your Learnings

An A/B test that produces a winner but is never documented or acted upon is a waste of time.

- Create a Central Repository: Log every test, the hypothesis, the winning variable, the final metrics, and the confidence level.

- Apply the Winner: Once a winner is declared, make that winning element (e.g., the subject line format, the CTA wording) the new Control for your next test. This is how you continually build a superior email strategy. Your goal is not to win the test—it's to establish a new, higher-performing baseline for your entire program.
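A central repository does not have to be elaborate; a shared spreadsheet or CSV file is enough to start. Here is a minimal sketch of a test log, assuming a hypothetical `ExperimentRecord` structure whose fields mirror the items listed above. The sample values are made up, and the demo writes to a temporary folder that you would swap for a real shared location.

```python
import csv
import tempfile
from dataclasses import asdict, dataclass, fields
from pathlib import Path

@dataclass
class ExperimentRecord:
    """One row in the A/B test log (hypothetical field names)."""
    test_date: str
    variable: str
    hypothesis: str
    winner: str
    metric: str
    rate_a: float
    rate_b: float
    confidence: float

def log_experiment(record, path):
    """Append a record to the CSV log, writing the header on first use."""
    path = Path(path)
    write_header = not path.exists()
    with path.open("a", newline="", encoding="utf-8") as handle:
        writer = csv.DictWriter(handle, fieldnames=[f.name for f in fields(ExperimentRecord)])
        if write_header:
            writer.writeheader()
        writer.writerow(asdict(record))

def read_log(path):
    """Return every logged test as a list of dictionaries."""
    with Path(path).open(newline="", encoding="utf-8") as handle:
        return list(csv.DictReader(handle))

# Demo with illustrative values, written to a throwaway folder
with tempfile.TemporaryDirectory() as folder:
    log_path = Path(folder) / "ab_test_log.csv"
    log_experiment(ExperimentRecord(
        test_date="2024-03-05",
        variable="subject line",
        hypothesis="A direct question will lift open rate over a statement",
        winner="B",
        metric="open rate",
        rate_a=0.21,
        rate_b=0.24,
        confidence=0.95,
    ), log_path)
    for row in read_log(log_path):
        print(row["test_date"], row["variable"], "winner:", row["winner"])
```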

Part 4: A/B Testing in the Modern Marketing Environment with Seamailer

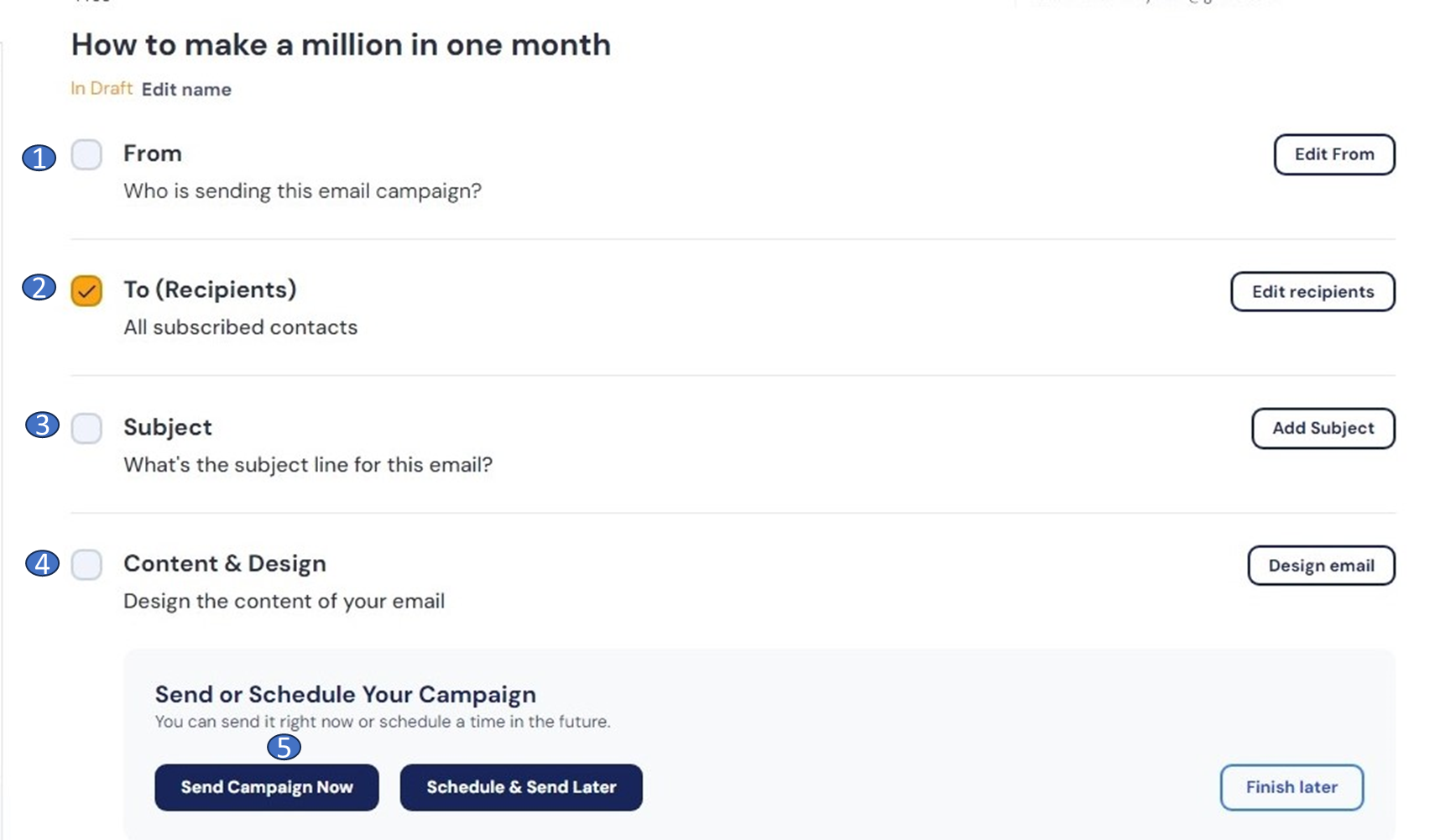

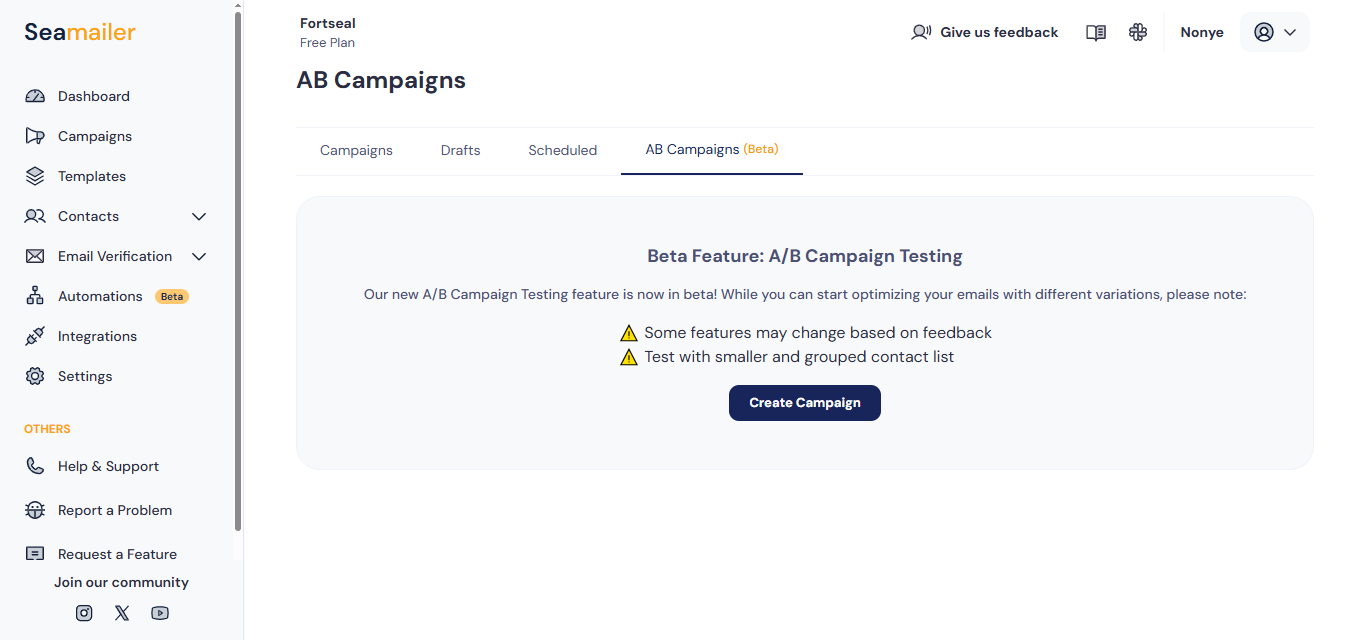

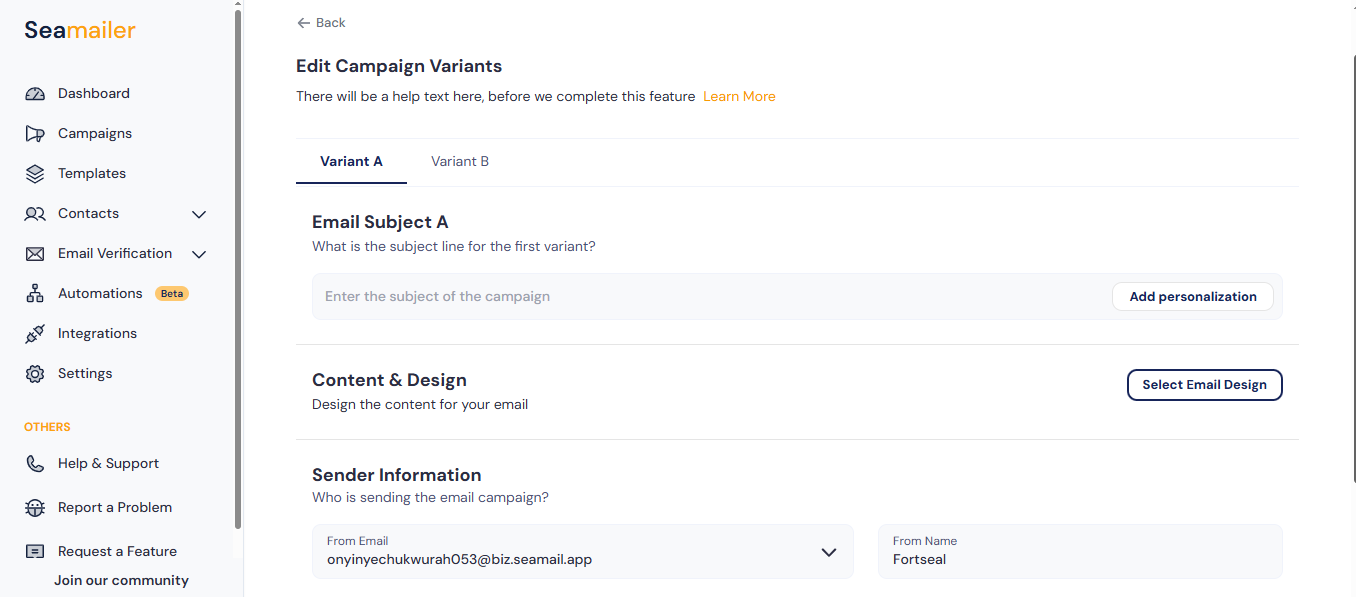

The process of segmenting lists, running tests, calculating statistical significance, and automatically sending the winner used to be a complicated, manual nightmare. Thankfully, modern Email Service Providers (ESPs) have transformed this into a seamless, automated process.

Platforms like Seamailer have integrated powerful, user-friendly A/B testing features that make this advanced technique accessible to everyone.

How Seamailer Streamlines A/B Testing:

- Intuitive Test Setup: Seamailer's campaign builder allows you to select the variable you want to test (Subject Line, From Name, Email Content, Send Time) with a few clicks. You simply create your two versions, A and B, within the same interface.

- Automated Sample Sizing: You tell Seamailer your target list size, and the platform automatically handles the random, unbiased list segmentation. It will recommend a statistically sound sample size (e.g., 10% for Version A and 10% for Version B) based on your total list count.

- Smart Winner Declaration: This is where the magic happens. You set the success metric (Open Rate, Click-Through Rate, or Conversion Rate) and the duration (e.g., Run for 4 hours). Seamailer then monitors the data, and as soon as one version achieves the preset statistical significance level, it immediately—and automatically—sends that winning version to the remaining 80% of your list. No more manual calculations or midnight alarm clocks to send the winner.

- Advanced Segmentation Integration: Seamailer lets you run A/B tests within specific segments. Want to know if your male subscribers aged 25-34 respond better to an urgent tone than a playful tone? Seamailer lets you drill down and test that specific hypothesis, ensuring your future segment-specific campaigns are perfectly optimized.

Leveraging the automation within a tool like Seamailer means you can run more tests, learn faster, and achieve campaign optimization with less overhead.

Part 5: The Advanced A/B Testing Playbook

Once you’ve mastered the basics—subject lines and CTAs—it’s time to move on to high-level, strategic testing that can dramatically reshape your entire customer journey.

1. The Segmentation-Based Test

Don't assume a "winner" for one segment is a "winner" for all.

- Test: Run the same CTA test separately within two distinct segments, Recent Buyers and Lapsed Subscribers, so that each segment is split between both versions.

- Version A CTA (no incentive): "See What's New"

- Version B CTA (direct incentive): "Get 15% Off Your Next Order"

- Learning: You might find that Lapsed Subscribers respond only to the direct incentive while Recent Buyers click either version, guiding you to make incentives a permanent part of your win-back automation.

2. The Automation and Flow Test

A/B testing isn't limited to a one-time broadcast. It’s arguably more important to test emails in your automated flows (Welcome Series, Abandoned Cart, Post-Purchase). These emails run 24/7 and, once optimized, are a source of passive, continuous revenue.

- Test: In your 3-email Abandoned Cart series, test the delay between the first and second email (e.g., 24 hours vs. 48 hours).

- Learning: You might discover that a 24-hour delay is too aggressive and generates more unsubscribes, while a 48-hour delay maximizes conversions, allowing you to optimize a core revenue-driving asset.
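In an always-on flow, subscribers enter one at a time, so you cannot shuffle a list up front. One common technique is to assign each person to a version by hashing their ID, which keeps the assignment stable every time the flow runs. The sketch below assumes hypothetical names (`assign_variant`, the test name `abandoned-cart-delay`, and the subscriber ID format); your ESP may handle this for you.

```python
import hashlib

def assign_variant(subscriber_id, test_name, variants=("A", "B")):
    """Deterministically map a subscriber to a variant.

    Hashing the test name together with the ID means the same person
    always gets the same version within a test, while different tests
    produce independent splits. (Hypothetical helper.)
    """
    key = f"{test_name}:{subscriber_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    bucket = int.from_bytes(digest[:8], "big") % len(variants)
    return variants[bucket]

# Delay before the second abandoned-cart email, per variant
DELAY_HOURS = {"A": 24, "B": 48}

variant = assign_variant("subscriber-123", "abandoned-cart-delay")
print(f"Variant {variant}: send email 2 after {DELAY_HOURS[variant]} hours")
```

Because nothing is stored, the assignment can be recomputed anywhere in the flow and will always agree with itself.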

3. The Personalization Depth Test

We know personalization is powerful, but how much is too much?

- Test:

- Version A: Simple salutation using the customer's first name.

- Version B: Dynamic content blocks showing product recommendations based on their last purchase or browsing history.

- Learning: While dynamic content is harder to set up, if Version B converts significantly higher, the effort is justified. If Version A is sufficient, you save development time for future campaigns.

4. The Value Proposition Test

This is an advanced test focused on what you're offering, not just how you're offering it.

- Test:

- Version A: Focuses on Free Shipping as the main offer.

- Version B: Focuses on a 15% Discount as the main offer.

- Learning: This reveals a deep insight into your audience's perception of value. A common pattern is that free shipping wins for low-cost items while a percentage discount performs better for high-cost items, but treat that as a hypothesis to confirm with your own list rather than a rule. Whatever you learn should influence your entire promotional calendar.

Part 6: Overcoming Common A/B Testing Pitfalls

Even seasoned marketers stumble over common mistakes. Avoid these traps to keep your data clean and your strategy sound:

Pitfall #1: Testing on Too Small a Sample

If your test groups are too small, your results will lack statistical significance. Always check your list size against an A/B test calculator or rely on the automated significance reports from a platform like Seamailer. Rule of Thumb: For a critical metric like CTR, aim for at least 1,000 to 2,000 recipients per variation.
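To see where a rule of thumb like this comes from, you can estimate the required group size with the standard normal-approximation formula for comparing two proportions. The sketch below assumes 95% confidence and 80% power, which are conventional defaults rather than figures from this guide, and the click rates are illustrative.

```python
import math
from statistics import NormalDist

def recipients_per_variation(baseline_rate, expected_rate, confidence=0.95, power=0.80):
    """Approximate recipients needed per version to detect the given lift.

    Uses the normal-approximation formula for a two-sided test on two
    proportions. Treat the result as a planning estimate, not a guarantee.
    """
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = baseline_rate * (1 - baseline_rate) + expected_rate * (1 - expected_rate)
    effect = expected_rate - baseline_rate
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)

# Hypothetical CTR scenarios: a large lift vs. a more modest one
large_lift = recipients_per_variation(0.03, 0.05)  # 3% -> 5% CTR
small_lift = recipients_per_variation(0.03, 0.04)  # 3% -> 4% CTR
print(f"3% -> 5% CTR: about {large_lift:,} recipients per version")
print(f"3% -> 4% CTR: about {small_lift:,} recipients per version")
```

Under these assumptions, a jump from 3% to 5% CTR needs roughly 1,500 recipients per version, which fits the rule of thumb, while a smaller lift from 3% to 4% needs more than 5,000. The smaller the improvement you hope to detect, the larger the groups must be.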

Pitfall #2: Ending the Test Too Early

It's tempting to stop a test when one version is clearly ahead after just an hour. However, you need to let the test run for the entire duration you set (e.g., 24 hours). Opens and clicks can be heavily skewed by immediate activity; you need the full picture to capture all time zones and subscriber habits.
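A small simulation makes the danger concrete. The sketch below runs many "A/A" tests in which both versions have exactly the same true click rate, so any declared winner is a false alarm. It compares checking for significance at ten points along the way against checking only once at the planned end. All parameters (300 runs, 1,000 recipients per version, a 10% click rate) are arbitrary choices for illustration.

```python
import math
import random

def p_value(clicks_a, n_a, clicks_b, n_b):
    """Two-sided p-value from a pooled two-proportion z-test."""
    pooled = (clicks_a + clicks_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 1.0
    z = (clicks_b / n_b - clicks_a / n_a) / std_err
    return math.erfc(abs(z) / math.sqrt(2))

def simulate_peeking(runs=300, per_arm=1000, looks=10, rate=0.10, alpha=0.05, seed=1):
    """Return (false-alarm rate when peeking, false-alarm rate at the end only)."""
    rng = random.Random(seed)
    step = per_arm // looks
    any_look_hits = 0
    final_look_hits = 0
    for _ in range(runs):
        clicks_a = clicks_b = 0
        hit_at_any_look = False
        significant = False
        for look in range(1, looks + 1):
            # Both versions share the same true click rate
            clicks_a += sum(rng.random() < rate for _ in range(step))
            clicks_b += sum(rng.random() < rate for _ in range(step))
            significant = p_value(clicks_a, look * step, clicks_b, look * step) < alpha
            hit_at_any_look = hit_at_any_look or significant
        any_look_hits += hit_at_any_look
        final_look_hits += significant  # result at the planned end of the test
    return any_look_hits / runs, final_look_hits / runs

peeking_rate, final_rate = simulate_peeking()
print(f"False winners when stopping at the first significant peek: {peeking_rate:.1%}")
print(f"False winners when waiting for the full duration: {final_rate:.1%}")
```

Stopping at the first significant peek can never produce fewer false winners than waiting, because the final check is itself one of the peeks. In runs like this, the peeking rate typically comes out well above the nominal 5%.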

Pitfall #3: Forgetting Context

If you send out an A/B test during a major national holiday or immediately after a huge industry news event, your results will be skewed by external noise. Try to run tests during periods of relatively normal user behavior unless you are specifically testing holiday messaging.

Pitfall #4: Testing Low-Impact Elements

While you could A/B test a comma vs. a semi-colon, the impact on your bottom line will be negligible. Prioritize high-impact elements like Subject Lines, CTAs, and Core Offers. Focus your energy where the potential ROI is highest.

Conclusion: The Path to Perpetual Optimization

A/B testing in email marketing is not a one-and-done task; it’s a philosophy of perpetual optimization. It's the commitment to continuous learning, allowing data to be your compass and your audience's behavior your map.

A ‘failed’ test is never a loss; it's a valuable data point that tells you what not to do, bringing you one step closer to what truly resonates.

By isolating variables, forming clear hypotheses, ensuring statistical significance, and leveraging the automation power of modern ESPs like Seamailer, you move past the uncertainty of guesswork. You establish a data-driven system that consistently refines your approach, translating directly into higher open rates, increased click-through rates, and ultimately, a healthier, more profitable email marketing channel.

Stop wondering what your audience wants. Start running the experiments that tell you exactly what they need. The campaign that generates the best results is one test away.